ETF Network

Getting Started

- Learn about how Etf works

- See the getting started guide for in-depth instructions on running your own node.

- Build apps with etf.js

What is it?

The ETF network (“Encryption to the Future”), powered by identity-based encryption and zero-knowledge proofs, is a Substrate-based blockchain that implements a novel “proof-of-extract” consensus mechanism in which network authorities leak secrets over time. The initial implementation uses a fork of Aura to implement a proof-of-authority version of EtF. The network acts as a cryptographic primitive that enables timelock encryption, unlocking use cases such as sealed-bid auctions and voting, front-running protection, and trustless atomic MPC protocols.

Who is it for?

The ETF network is for anyone who uses the internet! In its current form, anyone can read block headers and extract secrets from the predigest, which can be used for timelock encryption or as a randomness beacon. This functionality can be added to existing applications with the etf.js library. In the future, we will extend this functionality with cross-chain bridges.

Where are we?

We’re nearing the completion of our first web3 foundation grant! In the next phase of the project, we intend to redesign our consensus mechanism around proof-of-stake by implementing a proactive secret sharing scheme and integrating it with BABE, so that the network can scale more securely.

This project is sponsored by a web3 foundation grant.


Contact

- Join us on matrix! https://matrix.to/#/#ideal-labs:matrix.org

- https://idealabs.network

License

These docs, as well as the code for etf-network, etf.js, and the etf-sdk, are licensed under GPLv3.0.

EtF Network Overview

The ETF Network is a blockchain built with Substrate. Its core functionality revolves around its novel consensus mechanism, “Authoritative Proof-of-Knowledge”, which uses identity-based encryption and DLEQ proofs to leak a new “slot secret” within each authored block. The initial implementation is built on top of the Aura proof-of-authority consensus mechanism.

Check out the links below for a deep dive into the components:

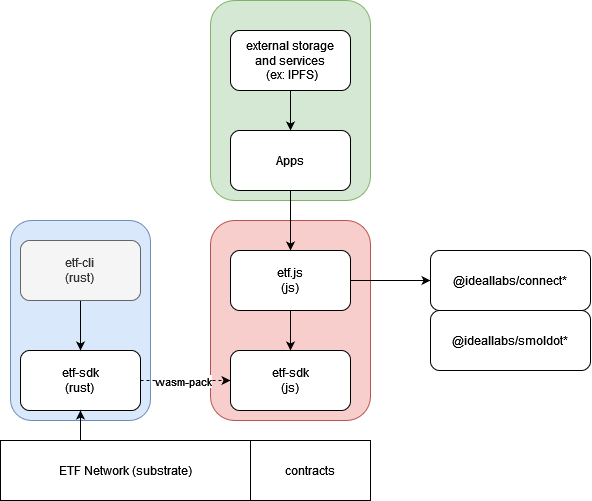

etf.js and the ETF SDK form a modular tech stack that supports the development of applications that use the ETF network. Developers are provided with tools to verify proofs and to perform timelock encryption against the ETF network. The platform also provides a framework for builders to define and implement their own protocols on ETF, extending what the network can offer. Currently, etf.js supports connections from (smoldot) light clients and full nodes only.

Getting Started

Since the ETF Network is a Substrate-based blockchain, in general all commands that work with Substrate's default CLI are compatible with it (e.g. for key generation).

Setup

Installation

To build the blockchain locally:

# clone substrate and checkout the etf branch

git clone git@github.com:ideal-lab5/substrate.git

cd substrate

git checkout etf

# nightly build

cargo +nightly build --release

Run

From Sources

As previously stated, all default Substrate commands will work. For example, to run the blockchain in dev mode as Alice, use:

./target/release/node-template --tmp --dev --alice

From Docker

The latest docker image can be found here

# pull the latest image

docker pull ideallabs/etf

# run the image

# the image accepts all substrate commands/flags

docker run -p 9944:9944 -it --rm --name etf-node-0 ideallabs/etf --unsafe-rpc-external --validator --dev --tmp

The raw chainspec for our testnet can be found here. To connect to the testnet, add --chain etfTestSpecRaw.json when running your node (contact us if you run into any issues).

Testing

unit tests

cargo +nightly test

Benchmarks

First navigate to /bin/node-template/node/ and build it with cargo +nightly build --profile=production --features runtime-benchmarks

Run the benchmark tests with:

cargo test --package pallet-etf --features runtime-benchmarks

Once built, generate weights against the compiled runtime with:

# list all benchmarks

./target/production/node-template benchmark pallet --chain dev --pallet "*" --extrinsic "*" --repeat 0

# benchmark the etf pallet

./target/production/node-template benchmark pallet \
  --chain dev \
  --wasm-execution=compiled \
  --pallet pallet_etf \
  --extrinsic "*" \
  --steps 50 \
  --repeat 20 \
  --output bin/node-template/pallets/etf/src/weight.rs

EtF Network Overview

This is an overview of the ETF Network’s consensus mechanism.

- Substrate-based blockchain

- consensus based on Aura, which adds IBE secrets and DLEQ proofs to block headers

- a pallet manages and updates the public parameters for identity-based encryption and DLEQ proofs

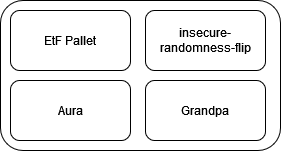

Pallets

The network requires a pallet, the etf-pallet, to function. The etf-pallet stores public parameters that are required to enable identity based encryption. These values are calculated offchain and encoded in the genesis block.

ETF Pallet

The ETF pallet stores public parameters needed for the IBE scheme. The values are set at genesis and can only be changed by the root user (via the Sudo pallet) by calling the update_ibe_params extrinsic. The extrinsic uses Arkworks to decode the input and ensure that the provided data is a valid element of G2; if it is, the extrinsic encodes it in storage. In the future, we intend to make this a more democratic process.

Consensus and Encryption to the Future

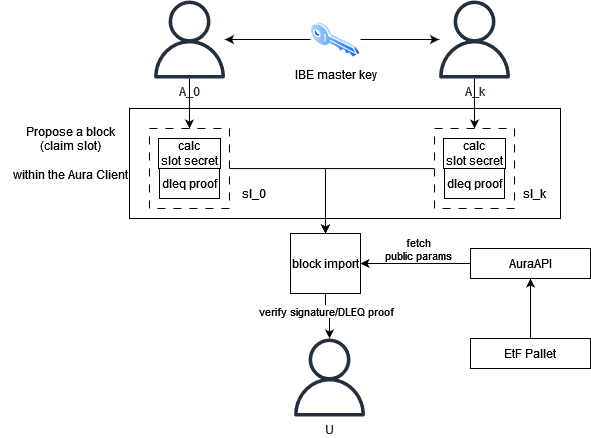

Here we present a high-level overview of how the consensus mechanism works. Essentially, the goal of our consensus mechanism is to construct a table of IBE secrets and public keys which grows at a constant rate and whose authenticity and correctness are ensured by consensus. For a deep dive into the math, see Encryption to the Future (the math) below.

There are four major phases:

- Setup: IBE Setup, slot identification scheme, and blockchain genesis

- Authority Selection: Round-robin authority selection (i.e. aura)

- Claim a slot: Block authors calculate an IBE secret for the identity and corresponding DLEQ proof, and include it in the new block header

- Block verification: Block importers verify the DLEQ proof when checking the block’s validity

The initial version of the network uses a fork of Aura, a round-robin proof of authority consensus mechanism. Each authority is an IBE master key custodian, which is created in the IBE setup phase (more on this here). This requires trust in each authority, a requirement we will relax in the future.

When a slot author proposes a block, they first use the master secret to calculate a slot secret (the IBE extract algorithm), which they add to the block header. This slot secret is intended to be leaked and made public. In order to ensure the correctness of the secret to be leaked, block producers include a DLEQ proof that shows the slot secret was correctly calculated. Along with this, they also sign the block as usual.

Block importers simply verify the DLEQ proof. If the DLEQ proof is not valid, the block is rejected.

Whenever a block is authored in a slot, the slot secret can then be extracted and used. Essentially, the blockchain is building a table of IBE secrets and public keys which grows at a constant rate and whose authenticity and correctness are ensured by consensus.

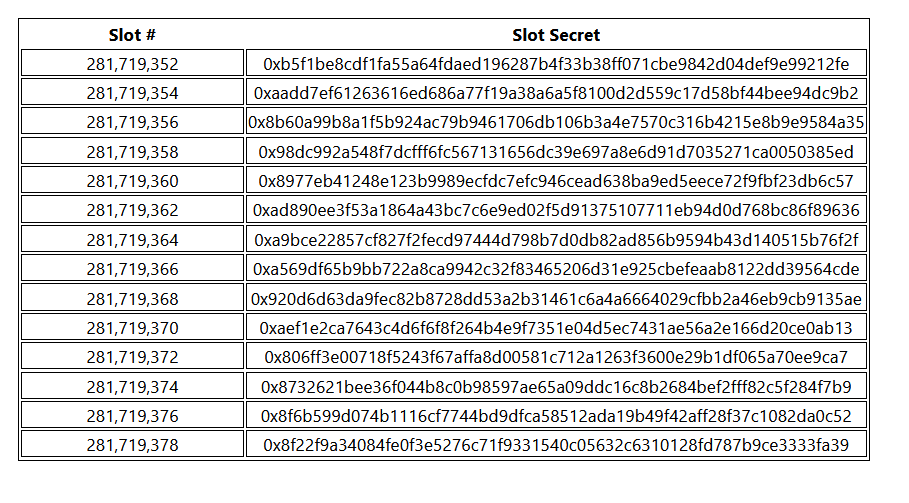

Slot Identity

In our proof-of-authority based network, there is a known set of authorities, say \(A = \{A_1, \ldots, A_n\}\), from which block authors are selected sequentially (round-robin). That is, for a slot \(sl_k\), the authority that authors the block in that slot is given by \(A_{sl_k} = A[sl_k \bmod |A|]\). A slot’s identity is given by \(ID_{sl_k} = sl_k\); we simply use the slot number as the slot identity. For example, a slot id could look like 0x231922012, where 231,922,012 is the slot number. We preserve the authority’s standard block seal within the block header in order to keep slot identities simple.
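The round-robin rule above can be sketched in a few lines of Rust. This is an illustration rather than the Aura fork's code: `slot_author` and `slot_identity` are hypothetical helpers, and encoding the identity as the slot number's 8 little-endian bytes is an assumption (the text only says that the slot number is the identity).

```rust
/// Round-robin authority selection: A_{sl_k} = A[sl_k mod |A|].
/// Returns None when the authority set is empty.
pub fn slot_author<T>(authorities: &[T], slot: u64) -> Option<&T> {
    if authorities.is_empty() {
        return None;
    }
    let index = (slot % authorities.len() as u64) as usize;
    authorities.get(index)
}

/// Slot identity ID_{sl_k} = sl_k. Hypothetical byte encoding: the slot number's
/// little-endian bytes, i.e. the input that would be fed to the hash-to-G1 function.
pub fn slot_identity(slot: u64) -> Vec<u8> {
    slot.to_le_bytes().to_vec()
}
```

For example, with three authorities, slot 231922012 maps to index 231922012 mod 3 = 1, the second authority.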

To get a public key from the slot id, we use a hash-to-G1 function, which maps identities to public keys in G1 (the elliptic curve group we’re working with). That is, each slot implicitly has an identity and, by evaluating that identity under the hash-to-G1 function, a public key \(Q\) in G1.

Claiming a Slot

When a block author claims a slot, they perform the extract algorithm of the IBE scheme: they use the slot public key along with the master secret to calculate the slot secret (i.e. \(d = sQ\), where \(s\) is the master secret and \(Q\) is the slot public key). To do this, we add new functionality to the existing AuraAPI that allows slot authors to fetch the IBE public parameters, which are stored in the etf pallet, and to read the master secret from local storage.

After calculating the slot secret, the slot author prepares a DLEQ proof that the slot secret was calculated from the master secret. We accomplish this by implementing a trait for DLEQ proving and verification using Arkworks. It allows the prover to demonstrate that, given some xG and xH, both were calculated from the same x without revealing x. In our scheme, one of the values is the slot secret, d = sQ, and the other is the master secret multiplied by the public parameter stored in the etf pallet. The DLEQ proof is then encoded within the block header when the author proposes the block. That is, each block header contains a PreDigest that holds the slot id, the slot secret, and the DLEQ proof:

PreDigest: {
  slot: 'u64',
  secret: '[u8;48]',
  proof: '([u8;224])'
}
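Because every field of the PreDigest has a fixed size, its SCALE encoding is a fixed 8 + 48 + 224 = 280-byte layout: SCALE writes a `u64` as 8 little-endian bytes and fixed-size byte arrays as raw bytes with no length prefix. The sketch below reproduces that layout by hand, without the parity-scale-codec crate. The struct and its `encode`/`decode` methods are illustrative only and assume the field order shown above.

```rust
use std::convert::TryInto;

/// Field sizes taken from the PreDigest type above.
pub const SECRET_LEN: usize = 48;
pub const PROOF_LEN: usize = 224;
pub const ENCODED_LEN: usize = 8 + SECRET_LEN + PROOF_LEN;

/// Illustrative mirror of the PreDigest carried in each block header.
#[derive(Debug, Clone, PartialEq, Eq)]
pub struct PreDigest {
    pub slot: u64,
    pub secret: [u8; SECRET_LEN],
    pub proof: [u8; PROOF_LEN],
}

impl PreDigest {
    /// slot (u64, little-endian) || secret || proof, matching SCALE for these field types.
    pub fn encode(&self) -> Vec<u8> {
        let mut out = Vec::with_capacity(ENCODED_LEN);
        out.extend_from_slice(&self.slot.to_le_bytes());
        out.extend_from_slice(&self.secret);
        out.extend_from_slice(&self.proof);
        out
    }

    /// Inverse of `encode`; returns None unless the input is exactly ENCODED_LEN bytes.
    pub fn decode(bytes: &[u8]) -> Option<Self> {
        if bytes.len() != ENCODED_LEN {
            return None;
        }
        let slot = u64::from_le_bytes(bytes[0..8].try_into().ok()?);
        let secret: [u8; SECRET_LEN] = bytes[8..8 + SECRET_LEN].try_into().ok()?;
        let proof: [u8; PROOF_LEN] = bytes[8 + SECRET_LEN..].try_into().ok()?;
        Some(PreDigest { slot, secret, proof })
    }
}
```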

Importing and Verifying Blocks

When a block importer receives a new block, they first check that the slot is correct. If correct, then they recover the DLEQ proof from the block header and verify it along with the block seal (which is still a normal Schnorr signature). If the DLEQ proof is valid, then we know the slot secret is valid as well. If the proof is invalid, then the secret is incorrect and the block is rejected.

Consensus Error Types

Block producers and importers are given two new consensus error types here. For block producers, InvalidIBESecret is returned when the Aura client cannot fetch the master IBE secret from local storage. For block importers, InvalidDLEQProof is triggered when a DLEQ proof cannot be verified; this is very similar in function to the BadSignature error type.
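The producer-side and importer-side checks described in the last two subsections can be sketched together. This is a simplified illustration, not the consensus crate's API: the enum, `require_master_secret`, `check_header`, and the `SlotMismatch` variant are hypothetical, and the `verify_dleq` closure stands in for the real DLEQ verifier. Checking the DLEQ proof before the seal is an arbitrary ordering choice.

```rust
/// Hypothetical, simplified error type: the two new variants named in the text,
/// plus stand-ins for the existing Aura checks.
#[derive(Debug, PartialEq, Eq)]
pub enum EtfConsensusError {
    /// Producer side: no master IBE secret could be read from local storage.
    InvalidIBESecret,
    /// Importer side: the DLEQ proof in the pre-digest does not verify.
    InvalidDLEQProof,
    /// Existing seal (signature) failure.
    BadSignature,
    /// Hypothetical: the header's slot is not the expected slot.
    SlotMismatch,
}

/// Simplified pre-digest: slot, leaked slot secret, and DLEQ proof bytes.
pub struct PreDigest {
    pub slot: u64,
    pub secret: Vec<u8>,
    pub proof: Vec<u8>,
}

/// Producer side: a missing master secret becomes InvalidIBESecret.
pub fn require_master_secret(stored: Option<Vec<u8>>) -> Result<Vec<u8>, EtfConsensusError> {
    stored.ok_or(EtfConsensusError::InvalidIBESecret)
}

/// Importer side: check the slot, then the DLEQ proof, then the seal.
pub fn check_header(
    digest: &PreDigest,
    expected_slot: u64,
    seal_is_valid: bool,
    verify_dleq: impl Fn(u64, &[u8], &[u8]) -> bool,
) -> Result<(), EtfConsensusError> {
    if digest.slot != expected_slot {
        return Err(EtfConsensusError::SlotMismatch);
    }
    if !verify_dleq(digest.slot, digest.secret.as_slice(), digest.proof.as_slice()) {
        return Err(EtfConsensusError::InvalidDLEQProof);
    }
    if !seal_is_valid {
        return Err(EtfConsensusError::BadSignature);
    }
    Ok(())
}
```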

Encryption to the Future (the math)

Preliminaries

Identity Based Encryption

EtF uses the Boneh-Franklin IBE scheme with type 3 pairings. In brief, identity-based encryption is a scheme where a message can be encrypted for an arbitrary string rather than for a specific public key. For example, a message could be encrypted for “bob@encryptme.com” so that only the owner of the identity “bob@encryptme.com” is able to decrypt it. BF-IBE consists of four PPT algorithms (Setup, Extract, Encrypt, Decrypt), defined briefly as:

- \(Setup(1^\lambda) \to (pp, mk)\) where \(\lambda\) is the security parameter, \(pp\) is the output (system) params and \(mk\) is the master key.

- \(Extract(mk, ID) \to sk_{ID}\) outputs the private key for \(ID\).

- \(Encrypt(pp, ID, m) \to ct\) outputs the ciphertext \(ct\) for any message \(m \in \{0, 1\}^*\).

- \(Decrypt(sk_{ID}, ct) \to m\) outputs the decrypted message \(m\).
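To see why a leaked slot secret \(d = sQ\) is enough to decrypt, here is the textbook BasicIdent variant of Boneh-Franklin written with the group assignment used in this document (identity keys in \(G_1\), public parameters in \(G_2\)). It is a sketch for intuition only: \(e: G_1 \times G_2 \to G_T\) is the pairing, \(H_1\) is the hash-to-G1 function, \(H_2\) hashes \(G_T\) elements to bit strings, and these names are standard notation rather than identifiers from the ETF codebase. The SDK may use a different (e.g. CCA-secure) variant.

```latex
\begin{aligned}
\textbf{Setup:}\;   & s \xleftarrow{\$} \mathbb{Z}_p,\quad P \in G_2,\quad P_{pub} = sP \\
\textbf{Extract:}\; & Q = H_1(ID) \in G_1,\quad d = sQ \\
\textbf{Encrypt:}\; & r \xleftarrow{\$} \mathbb{Z}_p,\quad U = rP,\quad V = m \oplus H_2\big(e(Q, P_{pub})^{r}\big),\quad ct = (U, V) \\
\textbf{Decrypt:}\; & m = V \oplus H_2\big(e(d, U)\big),\quad \text{since}\quad e(d, U) = e(sQ, rP) = e(Q, P)^{sr} = e(Q, sP)^{r} = e(Q, P_{pub})^{r}
\end{aligned}
```

The last line is where bilinearity does the work: anyone holding \(d\) for a slot can compute the same pairing value the encryptor used, without ever learning \(s\).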

DLEQ Proofs

EtF uses DLEQ proofs to verify the correctness of slot secrets without exposing the master secret for the IBE. In brief, the DLEQ proof allows a prover to construct a proof that two group elements were calculated from the same secret (e.g. xH and xG were calculated from the same x) without exposing the secret. Our scheme uses DLEQ proofs to leak an IBE slot secret \(d = sQ\) without exposing the master key, s.

The prover and verifier agree on two group generators, G and H, and the prover makes xG and xH publicly known. The goal is for the prover to demonstrate that xG and xH were derived from the same x.

First, the prover:

- chooses a random \(r \in Z_p\) and calculates \(R1 = rG, R2 = rH\).

- calculates the challenge value with a hash function \(c = H(R1, R2, xG, xH)\)

- calculates the response \(s = r + c \cdot x\)

- shares \((R1, R2, s)\)

With the agreed upon public parameters \(G, H\) and the proof \((R1, R2, s)\), a verifier can be convinced that the prover knows \(x\) without revealing it. The verifier:

- calculates the challenge value by hashing \(c = H(R1, R2, xG, xH)\)

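- checks whether \(sG = R1 + c(xG)\) and \(sH = R2 + c(xH)\) (equivalently, \(sG - c(xG) = R1\) and \(sH - c(xH) = R2\)). An honest proof passes because \(sG = rG + c\,xG = R1 + c(xG)\), and likewise for \(H\). If both equalities hold, the proof is valid; otherwise, it is invalid.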
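The prover and verifier steps above can be exercised end to end with a toy, std-only Rust sketch. It is an illustration under loud assumptions, not the Arkworks-based implementation: it works in a tiny multiplicative group (the squares modulo the safe prime 2039, which form a subgroup of prime order 1019), so the additive \(xG\) becomes \(G^x\) and the check \(sG = R1 + c(xG)\) becomes \(G^s = R1 \cdot (G^x)^c\). `DefaultHasher` stands in for a cryptographic hash, and the nonce \(r\) is passed in rather than sampled randomly. `prove`, `verify`, and `DleqProof` are hypothetical names. None of this is secure; it only shows the algebra.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

// Toy parameters (INSECURE): P = 2Q + 1 is a safe prime, and the squares mod P
// form a subgroup of prime order Q. G and H are two generators of that subgroup.
const P: u64 = 2039;
const Q: u64 = 1019;
const G: u64 = 4; // 2^2 mod P
const H: u64 = 9; // 3^2 mod P

/// Square-and-multiply: base^exp mod P.
fn pow_mod(mut base: u64, mut exp: u64) -> u64 {
    let mut acc = 1;
    base %= P;
    while exp > 0 {
        if exp & 1 == 1 {
            acc = acc * base % P;
        }
        base = base * base % P;
        exp >>= 1;
    }
    acc
}

/// Fiat-Shamir challenge c = H(R1, R2, xG, xH) reduced mod Q.
/// DefaultHasher is NOT cryptographic; it only stands in for a real hash here.
fn challenge(r1: u64, r2: u64, x_g: u64, x_h: u64) -> u64 {
    let mut hasher = DefaultHasher::new();
    (r1, r2, x_g, x_h).hash(&mut hasher);
    hasher.finish() % Q
}

#[derive(Debug, Clone, Copy)]
pub struct DleqProof {
    pub r1: u64,
    pub r2: u64,
    pub s: u64,
}

/// Prover: from secret x and nonce r (fresh and random in practice), publish
/// (G^x, H^x) plus a proof that both use the same exponent x.
pub fn prove(x: u64, r: u64) -> ((u64, u64), DleqProof) {
    let (x_g, x_h) = (pow_mod(G, x), pow_mod(H, x));
    let (r1, r2) = (pow_mod(G, r), pow_mod(H, r));
    let c = challenge(r1, r2, x_g, x_h);
    let s = (r % Q + c * (x % Q)) % Q; // s = r + c*x, exponents live mod Q
    ((x_g, x_h), DleqProof { r1, r2, s })
}

/// Verifier: recompute c, then check G^s == R1 * (G^x)^c and H^s == R2 * (H^x)^c.
pub fn verify(x_g: u64, x_h: u64, proof: &DleqProof) -> bool {
    let c = challenge(proof.r1, proof.r2, x_g, x_h);
    pow_mod(G, proof.s) == proof.r1 * pow_mod(x_g, c) % P
        && pow_mod(H, proof.s) == proof.r2 * pow_mod(x_h, c) % P
}
```

Tampering with the response \(s\) breaks both equalities, since it multiplies the left-hand sides by a non-trivial group element while the challenge stays the same.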

Timelock Encryption with ETF Network

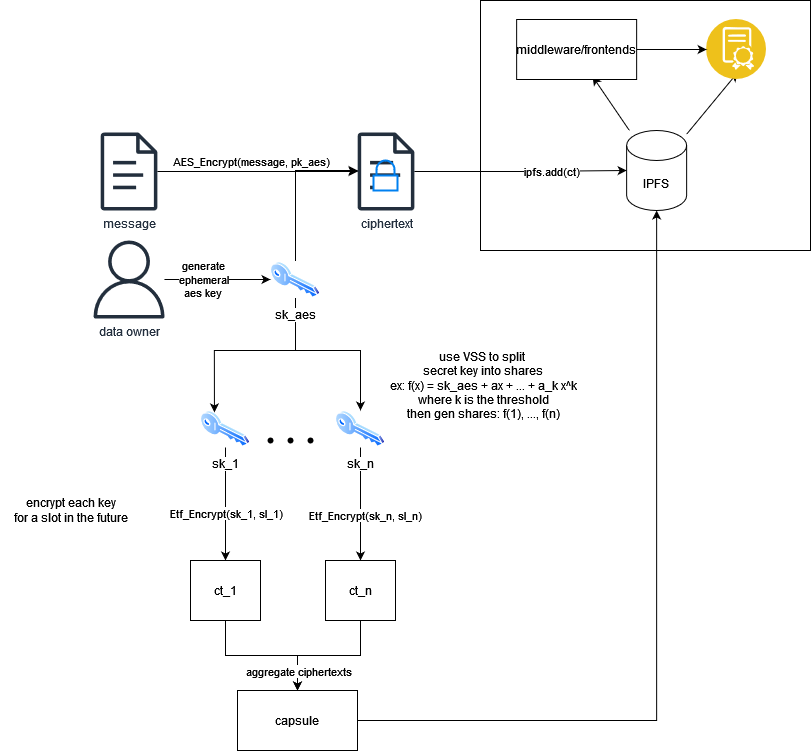

The encryption to the future (EtF) scheme consists of three PPT algorithms, \((Setup, Enc, Dec)\), such that:

- \( (f(x), nonce) \leftarrow Setup(k, n) \) for some threshold \(k \leq n\), where \(f(x)\) is a polynomial of degree \(k - 1\), so that any \(k\) of its evaluations determine it. The nonce is a 12-byte (96-bit) nonce for AES-GCM.

- \( (ct, capsule) \leftarrow Enc(m, \{id_1, \ldots, id_n\}) \) for slot identities \(id_1, \ldots, id_n\) and a message \(m \in \{0, 1\}^*\). It outputs the AES ciphertext \(ct\) and the IBE ciphertexts \( capsule = \{ ct_i = IBE.Encrypt(pp, id_i, f(i)) \}_{i=1}^{n} \), where \(pp\) is the IBE public parameter.

- \(m’ \leftarrow Dec((ct, capsule), \{sk_1, \ldots, sk_n\})\), where \(sk_i\) is the secret key leaked in the block authored in the slot with id \(id_i\); any \(k\) of these keys suffice.
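The polynomial in Setup/Enc/Dec is Shamir secret sharing. As a toy sketch, assuming (as is conventional) that the shared secret is the constant term \(f(0)\) and that share \(i\) is \(f(i)\), the Rust below splits a secret over the prime field \(\mathbb{Z}_{2^{61}-1}\) and recovers \(f(0)\) from any \(k\) shares with Lagrange interpolation. The field, the `u64` secret, and the caller-supplied coefficients (which must be random in practice) are simplifications; the SDK's field and encoding may differ, and the IBE encryption of each share is omitted.

```rust
/// Toy prime field modulus: the Mersenne prime 2^61 - 1.
const P: u64 = (1u64 << 61) - 1;

fn add(a: u64, b: u64) -> u64 { (a + b) % P } // a, b < P, so no overflow
fn sub(a: u64, b: u64) -> u64 { (a + P - b) % P }
fn mul(a: u64, b: u64) -> u64 { ((a as u128 * b as u128) % P as u128) as u64 }

fn pow(mut base: u64, mut exp: u64) -> u64 {
    let mut acc = 1;
    base %= P;
    while exp > 0 {
        if exp & 1 == 1 { acc = mul(acc, base); }
        base = mul(base, base);
        exp >>= 1;
    }
    acc
}

/// Multiplicative inverse via Fermat's little theorem (P is prime).
fn inv(a: u64) -> u64 { pow(a, P - 2) }

/// Split `secret` into n shares (i, f(i)), where f(x) = secret + c_1 x + ... + c_{k-1} x^{k-1}
/// and `coeffs` = [c_1, ..., c_{k-1}]. Any k shares reconstruct the secret.
pub fn split(secret: u64, coeffs: &[u64], n: u64) -> Vec<(u64, u64)> {
    (1..=n)
        .map(|i| {
            // Horner's rule, highest-degree coefficient first, constant term last.
            let mut acc = 0;
            for &c in coeffs.iter().rev() {
                acc = add(mul(acc, i), c % P);
            }
            (i, add(mul(acc, i), secret % P))
        })
        .collect()
}

/// Lagrange interpolation at x = 0: f(0) = sum_j y_j * prod_{m != j} x_m / (x_m - x_j).
pub fn reconstruct(shares: &[(u64, u64)]) -> u64 {
    let mut secret = 0;
    for (j, &(xj, yj)) in shares.iter().enumerate() {
        let (mut num, mut den) = (1, 1);
        for (m, &(xm, _)) in shares.iter().enumerate() {
            if m != j {
                num = mul(num, xm);
                den = mul(den, sub(xm, xj));
            }
        }
        secret = add(secret, mul(yj, mul(num, inv(den))));
    }
    secret
}
```

With \(k = 2\), \(f(x) = 42 + 7x\) gives shares \((1, 49), (2, 56), (3, 63)\); any two of them interpolate back to 42, while a single share reveals nothing about \(f(0)\).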

By using IBE and DLEQ proofs, slot winners calculate a proof of knowledge of the master secret along with the derived slot secret, which they include in each block header. Block importers validate the DLEQ proof when importing blocks, ensuring that secrets are only included when they are provably valid.

Builders

A guide for building dapps on top of the etf network.

- ETF-SDK: A no-std, wasm-compatible Rust library containing crypto primitives

- etf.js: A typescript library and wrapper around the etf-sdk wasm build. It enables interaction with the ETF Network as well as timelock encryption/decryption within the browser.

- Smart Contracts: The ETF Network supports smart contracts and exposes a custom chain extension for verifying the existence of slot secrets within contracts.

- Host a Validator: Run a validator on an Ubuntu instance

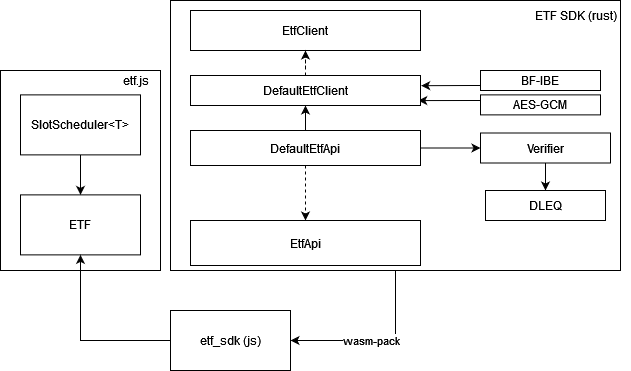

ETF SDK

The ETF SDK is the core of the modular tech stack for building protocols and apps on top of the ETF network.

Components

There are two main components that the SDK provides, the EtfClient and the EtfApi. Additionally, we will discuss slot schedules and the SlotScheduler.

EtfClient

The EtfClient is the core of the SDK. It implements functions that use the ETF network to encrypt and decrypt messages with IBE, and to perform timelock encryption via AES/IBE. Timelock encryption is only one example of a capability that the ETF network enables. The interface defines the method signatures required for our BF-IBE (which ETF requires), but implementations are free to experiment. To implement it, implement the functions below:

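// EtfClient method signatures (AesIbeCt and ClientError are SDK types)
pub trait EtfClient {
    // encrypt `message` for the slot identities `ids`; `t` is the decryption threshold
    fn encrypt(
        ibe_pp: Vec<u8>,
        p_pub: Vec<u8>,
        message: &[u8],
        ids: Vec<Vec<u8>>,
        t: u8,
    ) -> Result<AesIbeCt, ClientError>;
    // decrypt using the capsule and the slot secrets leaked so far
    fn decrypt(
        ibe_pp: Vec<u8>,
        ciphertext: Vec<u8>,
        nonce: Vec<u8>,
        capsule: Vec<Vec<u8>>,
        secrets: Vec<Vec<u8>>,
    ) -> Result<Vec<u8>, ClientError>;
}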

EtfApi

The EtfApi pulls together the EtfClient and DLEQ proof verifier (and potentially other verifiers in the future). It is both std and no-std compatible, meaning it plays nicely both with rust (e.g. to build a CLI) and also as a wasm build. The EtfApi implementation can be compiled to wasm and used along with etf.js to encrypt/decrypt messages.

We provide a default EtfApi implementation, which uses the DefaultEtfClient.

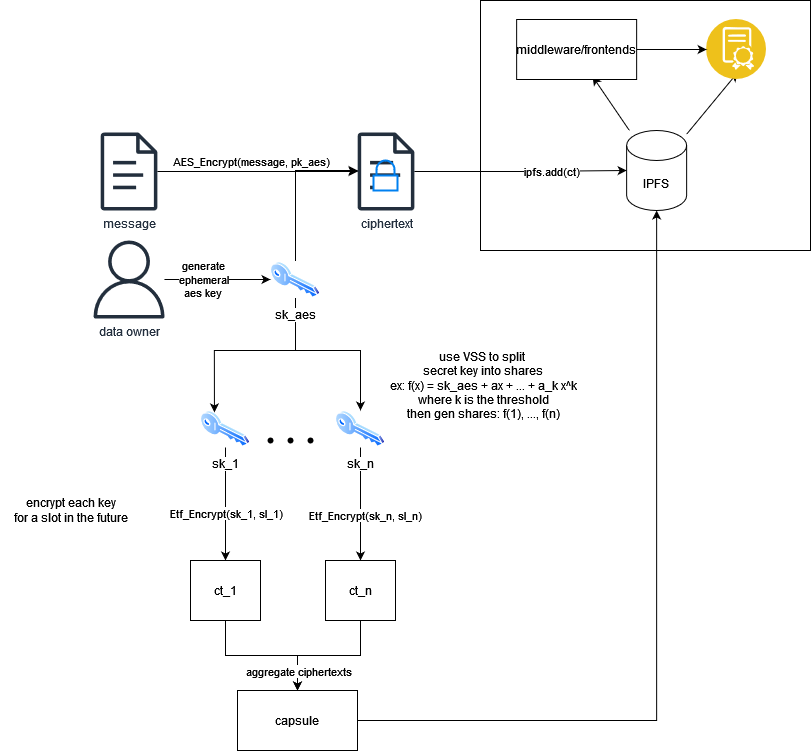

Timelock Encryption (and Decryption)

Encryption

The ETF SDK includes implementations of the EtfClient and EtfApi, named DefaultEtfClient and DefaultEtfApi. The client uses threshold secret sharing, AES-GCM (an AEAD scheme), and identity-based encryption to generate an AES secret key and encrypt its shares to the future, as illustrated in the diagram. The output contains aes_out = (AES ciphertext, AES nonce, AES secret key), capsule = (encrypted key shares), and slot_schedule. The capsule contains the IBE-encrypted key shares, and the slot schedule lists the slots for which they are encrypted; the two lists are assumed to be the same size and in the same order.

Decryption

Decryption works in reverse. Once blocks have been produced in at least a threshold of the slots in the slot schedule, the AES secret key can be reconstructed: decrypt the key shares that have become decryptable (e.g. 2 of 3 shares) and use Lagrange interpolation to recover the secret key, which is then used for AES decryption.
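Which shares are decryptable depends only on which scheduled slots have had blocks authored in them. A minimal sketch of that check, using a hypothetical `decryptable_shares` helper and a caller-supplied `block_authored` predicate that stands in for reading headers from the chain. It relies on the stated assumption that share \(i\) was encrypted for the \(i\)-th slot of the schedule.

```rust
/// Returns the indices of the capsule entries whose slots have been authored,
/// or None while fewer than `threshold` of them are available.
pub fn decryptable_shares(
    schedule: &[u64],
    threshold: usize,
    block_authored: impl Fn(u64) -> bool,
) -> Option<Vec<usize>> {
    let ready: Vec<usize> = schedule
        .iter()
        .enumerate()
        .filter(|&(_, &slot)| block_authored(slot))
        .map(|(i, _)| i)
        .collect();
    if ready.len() >= threshold {
        Some(ready)
    } else {
        None
    }
}
```

The returned indices select which capsule entries to IBE-decrypt with the matching slot secrets before running Lagrange interpolation.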

Building apps with etf network

Building with the ETF network is made easy through the etf.js library, which is essentially a wrapper around the wasm build of the etf-sdk. The library can be used either with a full node or with the @ideallabs/smoldot light client. In addition, it emits an event for each incoming block.

See the etf.js/examples for a working example on encrypting and decrypting with the sdk.

Light Client

Smoldot is a wasm-based light client that runs directly in the browser. Our smoldot implementation is a fork of the official one here. Our modifications only concern the expected block headers, ensuring they can be SCALE encoded and decoded. In all other respects, it is currently identical to the official smoldot.

ETF.JS SDK

This is a javascript SDK to encrypt and decrypt messages with the ETF network. In particular, it lets users read slot secrets from the ETF network, encrypt messages to future slots, and decrypt from historical slots.

Installation

To use the library in your code, the latest published version can be installed from NPM with:

npm i @ideallabs/etf.js

Or, you can build the code with:

git clone git@github.com:ideal-lab5/etf.js.git

cd etf.js

# ensure typescript is installed

npm i -g typescript

# install dependencies

npm i

# build

tsc

Usage

The etf.js library can be run either with a full node or with a light client (in browser).

Connecting to a node

First fetch the chainspec and import it into your project

wget https://raw.githubusercontent.com/ideal-lab5/substrate/milestone3/etfTestSpecRaw.json

import chainSpec from './resources/etfTestSpecRaw.json'

import { Etf } from '@ideallabs/etf.js'

Full node

To connect to a full node, pass the address of the node’s RPC endpoint to the Etf constructor, then call init with the chainspec.

let ws = 'ws://localhost:9944'; // use wss://<host>:443 for a secure connection

let api = new Etf(ws)

await api.init(chainSpec)

Smoldot

To run with an in-browser light client (smoldot), initialize the library with:

let api = new Etf()

await api.init(chainSpec)

This will start a smoldot light client in the browser, which will automatically start syncing with the network. With the current setup, this can take a significant amount of time to complete and we will address that soon.

:warning: Presently, there is a limitation to our smoldot implementation and it currently cannot be used to query smart contracts. We will resolve this problem shortly. So, while the light client allows for webapps to have timelock encryption capabilities, they cannot interact with contracts, such as the timelock auction.

Types

The init function takes an optional types parameter, which is passed through to the polkadot.js types registry and lets you register custom types if desired. The API also exposes the createType function.

// create custom types

const CustomTypes = {

Proposal: {

ciphertext: 'Vec<u8>',

nonce: 'Vec<u8>',

capsule: 'Vec<u8>',

commitment: 'Vec<u8>',

},

};

await api.init(chainSpec, CustomTypes)

api.createType('Proposal', data)

Timelock Encryption

Encryption

Messages are encrypted by passing the message, a threshold, a slot schedule, and a seed to the encrypt function. In the default EtfClient, encryption uses AES-GCM alongside ETF: threshold secret sharing (TSS) generates key shares, which are encrypted for the future slots in the slot schedule.

let message = "encrypt me!"

let threshold = 2

let slotSchedule = [282777621, 282777882, 282777982]

let seed = "random-seed"

let out = api.encrypt(message, threshold, slotSchedule, seed)

The output contains aes_out = (AES ciphertext, AES nonce, AES secret key), capsule = (encrypted key shares), and slot_schedule. The capsule contains the IBE-encrypted key shares, and the slot schedule lists the slots for which they are encrypted; the two lists are assumed to be the same size and in the same order.

Decryption

let m = await api.decrypt(ciphertext, nonce, capsule, slotSchedule)

let message = String.fromCharCode(...m)

Slot Scheduler

A slot schedule is simply a list of slots that you want to encrypt a message for. For example, a slot schedule could be [290871100, 290871105, 290871120]. In general, the slot schedule corresponds to the ids input of the encrypt function in the EtfApi. Once blocks have been authored in enough of the scheduled slots, the slot schedule together with the capsule (output from encryption) is enough information to recover the AES secret key produced during encryption.

The SDK provides the SlotScheduler interface that can be implemented to create your own slot scheduling logic.

export interface SlotScheduler<T> {

generateSchedule(n: number, currentSlot: number, input: T): SlotSchedule;

}

The SDK includes a default implementation, the DistanceBasedSlotScheduler:

const slotScheduler = new DistanceBasedSlotScheduler()

let slotSchedule = slotScheduler.generateSchedule({

slotAmount: shares,

currentSlot: parseInt(latestSlot.slot.replaceAll(",", "")),

distance: distance,

})

Events

The Etf client subscribes to new block headers and emits a “blockHeader” event each time a new header is seen. To hook into this, set up an event listener and read the latest known slot:

// listen for blockHeader events and log the latest known slot
document.addEventListener('blockHeader', () => {
  console.log(api.latestSlot)
})

Smart Contracts

The ETF network supports ink! smart contracts via Substrate’s pallet-contracts.

Chain Extension and ETF Environment

The ETF Network uses a custom chain extension to allow smart contracts to check if a slot is in the future or in the past. The chain extension allows you to check if a block has been authored in a given slot, which is very useful in cases where it is important to know if data can be decrypted. The custom environment can be configured in ink! smart contracts to call the chain extension exposed by the ETF network runtime.

See the auction orchestrator for an example.

ETF Environment setup

- Add the dependency to your contract

etf-chain-extension = { version = "0.1.0", default-features = false, features = ["ink-as-dependency"] }

- Configure the environment in your contract

use etf_chain_extension::ext::EtfEnvironment;
#[ink::contract(env = EtfEnvironment)]
mod your_smart_contract {
    use crate::EtfEnvironment;
    // ...
}

- Query the runtime to check if a block has been authored in the slot.

self.env()
    .extension()
    .check_slot(deadline)

Ubuntu Full node setup

:warning: This is a WIP.

This is a guide on how to set up a full validator node on an Ubuntu instance.

This will walk through:

- Installing dependencies, chain spec, and node (TODO)

- Setting up the node as a system service (In progress)

- configuring proxies (nginx) and certificates (Let's Encrypt) for the websocket connections (TODO)

Some of this information applies to any Substrate-based node, not only ETF network nodes.

Setup system service

mkdir ~/etf

cd ~/etf

wget https://raw.githubusercontent.com/ideal-lab5/substrate/milestone3/etfTestSpecRaw.json

sudo nano etf.service

Then configure the file:

[Unit]

Description=ETF Bootstrap Node

After=docker.service

Requires=docker.service

[Service]

TimeoutStartSec=0

ExecStartPre=-/usr/bin/docker stop %n

ExecStartPre=-/usr/bin/docker rm %n

ExecStartPre=/usr/bin/docker pull ideallabs/etf

ExecStart=/usr/bin/docker run --rm --name %n -v /home/ubuntu/etf/etfTestSpecRaw.json:/data/chainspec.json -p 9944:9944 -p 30333:30333 -p 9615:9615 ideallabs/etf --chain=/data/chainspec.json [add your params]

[Install]

WantedBy=multi-user.target

Replace the path to the chainspec with the absolute path of wherever you stored the file. Also replace [add your params] with the flags you need. For example, to run a full “Alice” validator exposed as a bootstrap node, you could use:

--alice --unsafe-rpc-external --rpc-cors all \
  --node-key 0000000000000000000000000000000000000000000000000000000000000001 \
  --listen-addr /ip4/0.0.0.0/tcp/30332 --listen-addr /ip4/0.0.0.0/tcp/30333/ws \
  --prometheus-external

Store the file as etf.service and create a symlink in the systemd service directory and enable the service:

cd /etc/systemd/system/

# create a symlink

sudo ln -s ~/etf/etf.service .

# enable the service

sudo systemctl enable etf.service

Then use it as any other system service:

sudo systemctl [start | stop | status] etf

Timelock Auction

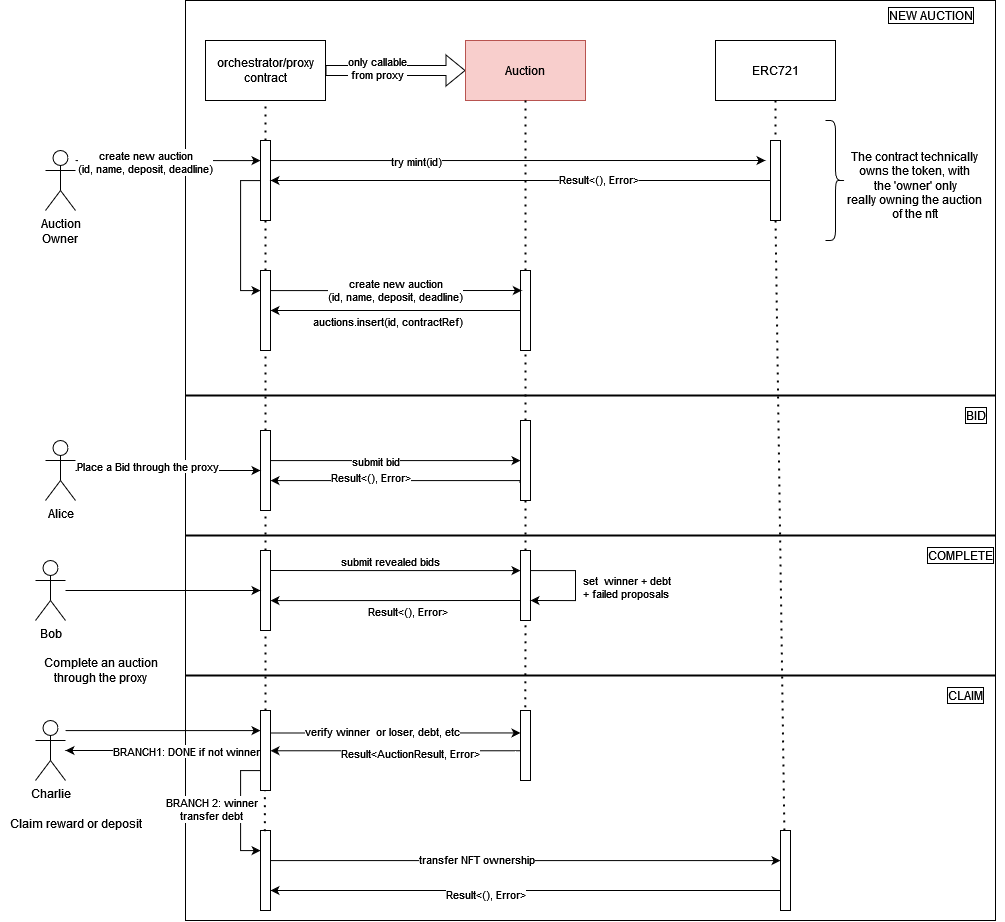

The timelock auction contract is a Vickrey auction, or sealed-bid second-price auction (SBSPA), enabled with timelock encryption via the ETF network. In a Vickrey auction, the highest bidder wins but the price paid is the second highest bid. Using timelock encryption enables a non-interactive winner selection for the auction, where all bids can be revealed with no interaction from the accounts that proposed them.

How It Works

There are four phases to the auction. An auction has a deadline, which is a slot in the future. Bids are encrypted for the deadline slot offchain using the etf.js SDK and published to the contract along with a commitment to the bid (a sha256 hash). Once the slot secret is revealed at the deadline, bidding closes and the auction can be completed. The ciphertexts must then be downloaded and decrypted offchain. Then, for each decrypted bid, the contract calculates the hash and ensures it matches the hash provided by the bidder. Winner selection logic chooses the highest bidder as the winner.

Setup

First, the auction owner specifies the auction item and deadline slot, ((asset_id, amount), deadline, min_bid) and deploys the auction. Then, the auction owner starts the auction by transferring ownership of the auction items to the contract.

Bidding

The bidding phase starts as soon as the auction is deployed. Here, each participant:

- determines a bid, \(b \geq bid_{min}\)

- calculates a sha256 hash \( c = Sha256(b) \)

- generates the ciphertext \((ct, nonce, capsule) \leftarrow ETF.Enc(P, P_{pub}, b, deadline) \)

- publishes the ciphertext and hash, \(((ct, nonce, capsule), c)\), as a signed transaction to the smart contract

Notes:

- in the future, this can be modified to include a zk state proof that you’ve reserved the amount that was hashed to get \(c\). This would be verified when selecting a winner.

Non-Interactive Reveal and Winner Selection

As stated above, the main allure of this approach is to make the bid reveal and winner selection non-interactive, while retaining full decentralization. For now, this is not a zero-knowledge protocol, though through some tweaks it could be made into one.

The auction can be completed after the deadline passes. There are two parts to this phase, offchain decryption and onchain verification.

OFFCHAIN: download and decrypt all published ciphertexts to recover the bids. Bids are recovered as follows:

- \(sk \leftarrow ETF.Extract(slot) \) to retrieve the slot secret from the block in the given slot

- for each published \((who, (ct, nonce, capsule), hash)\), where \(who\) is the account that published the data, decrypt the ciphertext \(b’ \leftarrow ETF.Dec(ct, nonce, capsule, sk)\)

- complete the auction by publishing a map of account to decrypted bids, \((who_i, b’_i)\)

- note: we must ensure ciphertext integrity when publishing it (e.g. with a MAC)

ONCHAIN: the contract verifies each provided, revealed bid and then selects a winner.

- track the

- for each \((who_i, b’_i)\):
    - fetch the hash published by \(who_i\), \(c_i\)
    - calculate \(c’_i := Sha256(b’_i)\)
    - check if \(c’_i = c_i\):
        - if not, the revealed bid is invalid and the function returns an error
        - if it is valid, store the bid and continue

- choose the winner as the first highest-bidder.
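The onchain verification and winner-selection steps above can be sketched in plain Rust (not ink!). This is an illustration, not the auction contract's code: `AccountId = u32`, `RevealError`, `Outcome`, and `select_winner` are hypothetical, and the `hash_bid` closure stands in for Sha256, which is not in the standard library. Ties keep the earlier bidder, matching "the first highest-bidder", and `second_bid` is the price the winner pays under the Vickrey rule; the sketch leaves it as None when there is only one valid bid rather than guessing a reserve-price policy.

```rust
use std::collections::HashMap;

/// Hypothetical account identifier used only in this sketch.
pub type AccountId = u32;

#[derive(Debug, PartialEq, Eq)]
pub enum RevealError {
    /// The account never published a commitment.
    UnknownBidder(AccountId),
    /// Sha256(b'_i) does not match the published commitment c_i.
    CommitmentMismatch(AccountId),
}

#[derive(Debug, PartialEq, Eq)]
pub struct Outcome {
    pub winner: AccountId,
    pub winning_bid: u128,
    /// Second-highest bid (the Vickrey price); None if only one valid bid exists.
    pub second_bid: Option<u128>,
}

/// Verify every revealed bid against its commitment, then pick the first highest
/// bidder and the second-highest bid. Any invalid reveal aborts with an error.
pub fn select_winner(
    commitments: &HashMap<AccountId, [u8; 32]>,
    reveals: &[(AccountId, u128)],
    hash_bid: impl Fn(u128) -> [u8; 32],
) -> Result<Option<Outcome>, RevealError> {
    let mut best: Option<(AccountId, u128)> = None;
    let mut second: Option<u128> = None;
    for &(who, bid) in reveals {
        let c = commitments.get(&who).ok_or(RevealError::UnknownBidder(who))?;
        if hash_bid(bid) != *c {
            return Err(RevealError::CommitmentMismatch(who));
        }
        match best {
            None => best = Some((who, bid)),
            // Strictly greater only: ties keep the earlier bidder.
            Some((_, top)) if bid > top => {
                second = Some(top);
                best = Some((who, bid));
            }
            Some(_) => {
                if second.map_or(true, |s| bid > s) {
                    second = Some(bid);
                }
            }
        }
    }
    Ok(best.map(|(winner, winning_bid)| Outcome { winner, winning_bid, second_bid: second }))
}
```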

Post-Auction

After the winner is selected, participants can call the auction contract to either:

- claim the prize if you are the winner

- reclaim your deposit if you are not the winner

Immediately post-auction, a countdown starts during which the winner can claim the prize. The countdown can be configured when deploying the contract, and is a future slot number. When claiming the prize, the winner pays the second-highest bid. When the balance transfer is successful, the contract transfers ownership of the assets to the winner. If the winner doesn’t claim the prize before the deadline, the next-highest bidder is selected as the new winner, and the countdown restarts. This can repeat until the list of participants is exhausted, if desired.

Future Work

- currently, there is no enforced relationship between a bid and the deposit (i.e. the collateral) that backs it.

- there is no negative impact to participants who issue invalid bids. We intend to address this in the future, but that is out of scope for this doc.